What Happened to Stack Overflow in 2014?

(Originally published on meta.stackoverflow Stack Exchange by Jon Ericson.)

Shog suggests there is a natural equilibrium between askers and answerers. My gut reactions were:

~8k questions a day is pretty remarkable given the site’s humble, even naive, beginnings. So much has been done to help the whole operation scale and yet sometimes the works get jammed up. Regular users don’t often see that flags (3.3m so far) require constant attention from a relatively small squad of moderators. It would be even more remarkable if the whole operation were not implicitly rate limited somehow.

The explanation is not exactly satisfying.

Mechanically, the number of answers on a site can be described as:

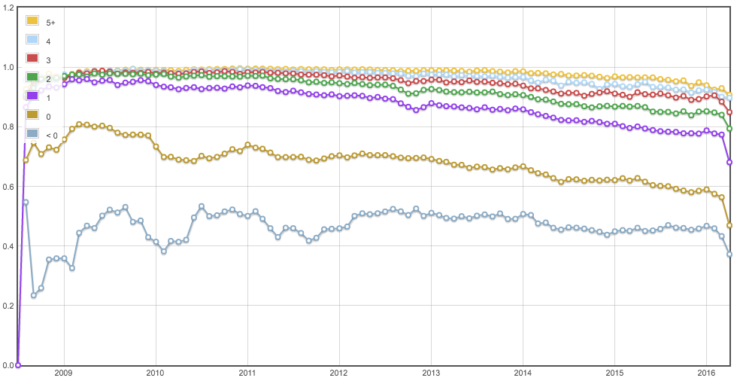

answers = questions * answers_per_question

Since we optimize for pearls, it’s really the answers that are important. If you input a junky question, the best you can hope for is no answers at all. And, in fact, questions with lower scores are less likely to be answered:

This chart shows the rate at which questions are answered, by ask date. The downward trend is partially an artifact of it taking time to answer difficult questions: as time goes by, the odds of a question with a score of 5+ getting answered approach unity. There might also be an increased reluctance to answer questions, but it’s hard to tease that out of the data without analysing the timing of votes and answers. For our purposes, however, the important point is the answer gap between positive, zero and negative question scores.
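As a rough illustration of the arithmetic behind these numbers, here is a minimal Python sketch. It assumes each question can be reduced to a `(score, answer_count)` pair; the sample list is invented for illustration and is not real Stack Overflow data, and the function names are hypothetical rather than taken from any actual query. It computes the share of questions with at least one answer in each score bucket, and then checks the identity answers = questions * answers_per_question.

```python
from collections import defaultdict

# Hypothetical sample of (question_score, answer_count) pairs.
# These values are made up for illustration; they are NOT real site data.
QUESTIONS = [
    (6, 3), (5, 1), (2, 1), (1, 0),      # positive scores
    (0, 1), (0, 1), (0, 0), (0, 0),      # zero scores
    (-1, 1), (-1, 0), (-2, 0), (-3, 0),  # negative scores
]


def score_bucket(score):
    """Classify a question score as positive, zero or negative."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "zero"


def answered_rate_by_bucket(questions):
    """Return the fraction of questions with at least one answer, per bucket."""
    asked = defaultdict(int)
    answered = defaultdict(int)
    for score, answer_count in questions:
        bucket = score_bucket(score)
        asked[bucket] += 1
        if answer_count > 0:
            answered[bucket] += 1
    return {bucket: answered[bucket] / asked[bucket] for bucket in asked}


def total_answers(questions):
    """Compute answers as questions * answers_per_question."""
    question_count = len(questions)
    answers_per_question = sum(count for _, count in questions) / question_count
    return question_count * answers_per_question


if __name__ == "__main__":
    for bucket, rate in answered_rate_by_bucket(QUESTIONS).items():
        print(f"{bucket}: {rate:.0%} answered")
    print(f"total answers: {total_answers(QUESTIONS):.0f}")
```

Splitting the total this way shows the two levers separately: the number of answers can fall either because fewer questions are asked or because each question attracts fewer answers, and the per-bucket rates show where the second effect is concentrated.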

So far, the equilibrium theory seems plausible. Questions that are unwelcome (as indicated by downvotes) are less likely to be answered. When users’ questions are not answered, they are less likely to return to ask more. Roughly half of downvoted questions are never answered and eventually deleted.

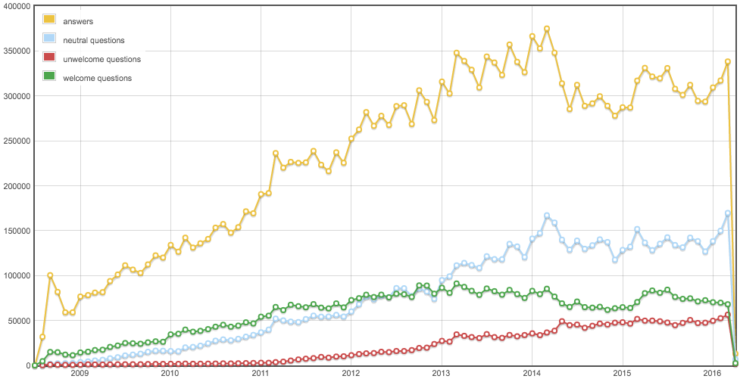

The trouble, at least when it comes to 2014, is that unwelcome questions (i.e., those with a negative score) have been increasing steadily since at least 2011, if not beta:

In economics, there’s a concept called price insensitivity in which products can be sold for increasing prices without decreasing demand. High-end fashion, for instance, tends to be bought by people who don’t pinch pennies. Drugs (both the ones that correct health problems and the addictive type) can also be sold for higher prices without hurting sales if their effects cannot be duplicated by a cheaper product. Then there’s See’s Candy, which raises its price per pound each year, yet I, along with thousands of other loyal customers, buy roughly the same amount. My guess is that Stack Overflow’s reputation for quality answers encourages people to ask questions even in the face of downvotes and silence.

Instead, the decreased activity seems correlated with a decrease in questions getting upvotes and a corresponding increase in questions not getting votes at all. It’s difficult to infer causation, and I see two possible theories:

Starting around 2013 and peaking around March 2014, people began asking fewer interesting questions. That led to a decrease in voting on questions and fewer answers being given. Since the feedback on these uninteresting questions was discouraging, people began asking fewer questions on the whole. Meanwhile, truly poor questions continued being asked with little regard to negative feedback.

Stack Overflow users began noticing increasing numbers of truly awful questions and decided, rightly, that downvoting and refusing to answer them is the best remedy. These questions fit broad categories of awful and users began withholding votes from questions that were not themselves awful, but bore some of the markers of awful. Fewer of these questions got answered and askers of mediocre questions did not see any point in trying to improve.

These are equally probable theories to my way of thinking. I could even see a blended theory being correct. But the evidence does not suggest that an increase in awful questions is the proximate cause of the decrease in answering during 2014. Instead, the decrease seems to be a result of fewer welcome (upvoted) questions.

Please direct comments to the original post.