Rewriting a Git repository: Dealing with distribution

This is part three of a series:1

Previously I made an analogy that Git repositories are like saving complete chess board states rather than merely saving each move as it happens. With Git it’s always possible (and quick!) to recover all previous states. Despite some clever compression techniques, that feature creates a situation where repositories grow unreasonably large if you happen to save binary files and update them frequently. Fortunately we can save these files efficiently outside of Git, as I discussed last time.

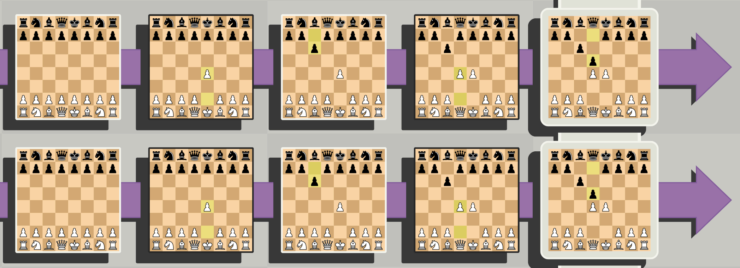

In this post I’m going to throw another difficulty in the mix: distributed Git repositories. Suppose we are storing a chess opening:

This is the main line of the Caro-Kann Defence which is the start of innumerable chess games. No doubt people were using these moves organically since the modern game of chess was invented. But after Horatio Caro published his analysis of the opening, players have been essentially making copies of that standard opening. It’s the chess version of reusing code.

Git is specifically designed to let people create copies of repositories. If anyone wants to work on the code, they simply clone the repository on their own machine. Perhaps more frequently, Git facilitates cloning a complete copy on a virtual machine, which might be hosted anywhere (including a volume on your local machine). By default, cloning produces a new repository identical to the original:

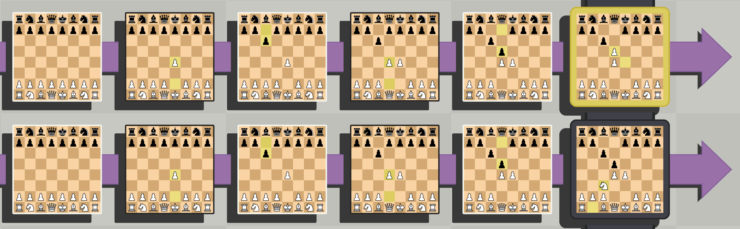

People use chess openings to save time and avoid doing complicated analysis of the early moves. The real game doesn’t start until one of the players diverges from the main line and makes a less common move. It doesn’t take long for a game to be unique in the annals of chess after that point. Playing out a new set of moves makes chess exciting.

GitHub allows forking a repository so people can make changes that may or might not be brought back to the source repository. It’s sorta like copying a standard chess opening and adapting an “out of book” line for your own games. If the original repository changes, owners of forks can take those changes. If you want to submit a change for consideration by the original developer, the standard method is to fork a repository and ask the owner to accept a pull request with your changes. The chess analogy would be to submit an off-book line for consideration in the next edition of Modern Chess Openings (MCO).

Since it’s so easy to get a copy of a repository, there’s likely to be tons of copies of especially useful code spread around the world. The EDB Docs repository has 177 forks on GitHub and been cloned hundreds, if not thousands, of times. All of these numbers are bound to go up by the time you read this. The point is, there are many copies of the repository each of which includes the entire history of the project.

Distributed data is generally a great thing. If one copy gets

deleted, it’s handy to be able to consult another copy. Gone are the

days of shipping backup tapes to offsite storage. Just

git push to the world and git clone to restore

the backup. On the downside it’s nearly impossible to put the genie back

in the bottle once you’ve put a change into the world. At one of my

previous employers, a developer accidentally pushed hundreds of user

emails to a public repository. By the time it was discovered, the

repository had been cloned and forked several times. Removing that data

was not

at all easy.

In 1962, Fritz Leiber wrote a short story for Worlds of If Science Fiction called “The 64-Square Madhouse”2 in which a chess-playing Machine was entered in a tournament. The Machine seemed unbeatable, but one opponent discovered a strategy to win one game:

“Shut up, everybody!” Dave ordered, clapping his hands to his face. When he dropped them a moment later his eyes gleamed. “I got it now! Angler figured they were using the latest edition of MCO to program the Machine on openings, he found an editorial error and then he deliberately played the Machine into that variation!”

Of course the programmers were able to fix the error in the Machine overnight, but it’d be impossible to correct all the copies of MCO distributed around the world. And replaying all the games that went down the mistaken line? That’s another science fiction story altogether.

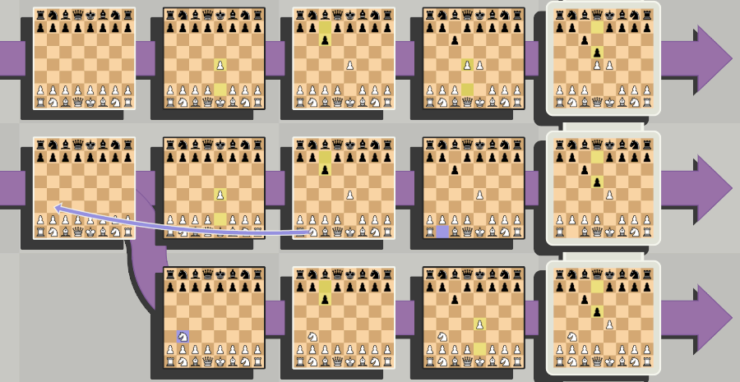

Do you remember footnote #2 from the first post in the series3 where I mentioned 5D Chess With Multiverse Time Travel? Now is the time to show its true potential since the game allows chess pieces to travel back in time. For instance, here’s game where a white knight goes back to the start of the game. In order to resolve the time travel paradox, a new timeline is created with the knight while the original timeline is left without the knight.

Not perfectly analogous to distributed source control, but you can see the trick is to hunt down and eliminate all the alternate timelines so that only the one you want to preserve is left. In 5D Chess, this is accomplished by maneuvering your opponent into a losing position which they cannot escape even using the power of time travel. With GitHub, the task can be more complicated.

Remember every clone4 has a full history of every commit

pushed to the remote repository. So removing files with

git rm doesn’t help. Even using the unholy commands to

rewrite history will only work on repositories you control. Clones and

forks you don’t control will have copies of the history you removed

which could be remerged into your GitHub repository. That’d put you in a

worse place then when you started.

Rewriting Git history, in other words, risks the equivalent of time travel paradoxes. If you’re the only person to have ever made a copy, you’ll probably be alright using one of the many available tools.5 As the only observer, the only paradoxes are philosophical. Does a memory exist when the cause of that memory has been wiped from history? But if you are on a team of people who all use the same GitHub repository, the job is more complicated.

It’s not so much a technically complicated job as socially difficult. In order to pull it off, you need to communicate with all sorts of people who may or may not care about your problems. My co-workers were motivated to help, but what about the random user who contributed a typo fix and forgot about their fork? I wrote up this set of instructions and sent out emails to everyone I had a reason to suspect had make a copy. And then I moved on content in the knowledge that only some disk space, and not the space-time continuum itself, was at risk.

I no longer work at EDB, but I found this draft and decided to finish writing it. Hopefully I remember enough to be informative!↩︎

I owe a debt of gratitude to this question and answer on Science Fiction & Fantasy—Stack Exchange for showing me that it wasn’t Asimov who wrote it. Leiber’s story was prophetic in some ways since it foresaw Deep Blue 35 years or so in advance.↩︎

You wouldn’t unless you’d just read the first post and skipped the second for some reason. It was a rhetorical question. Sue me.↩︎

Every clone, that is, that doesn’t somehow limit the depth of the clone. JourneymanGeek pointed out that you can do a shallow clone that only takes the most recent commit. Trouble is most people won’t do that or even know that it’s a possibility.↩︎

We used BFG Repo-Cleaner, but as that article suggested, I’d probably use git-filter-repo these days.↩︎